Regulatory compliance

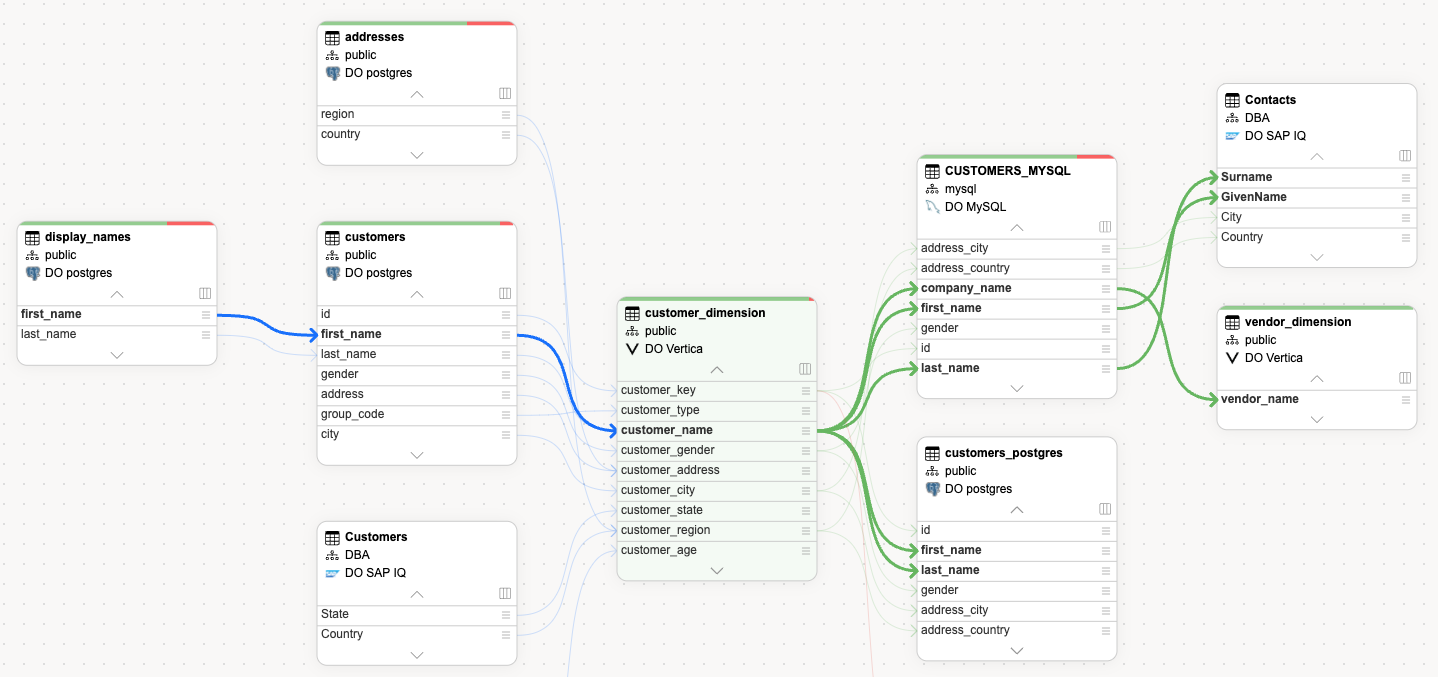

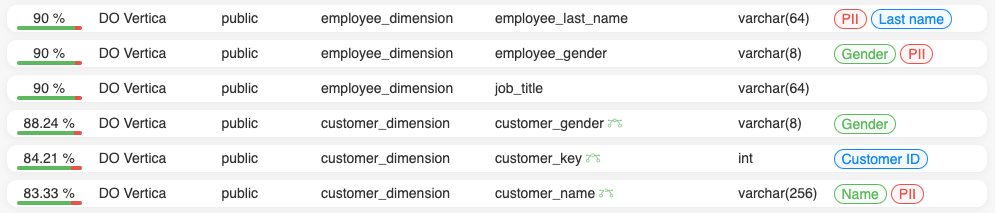

Improve compliance with regulations such as BCBS 239, GDPR, DORA, and AI and data governance acts.

Analytics & reporting

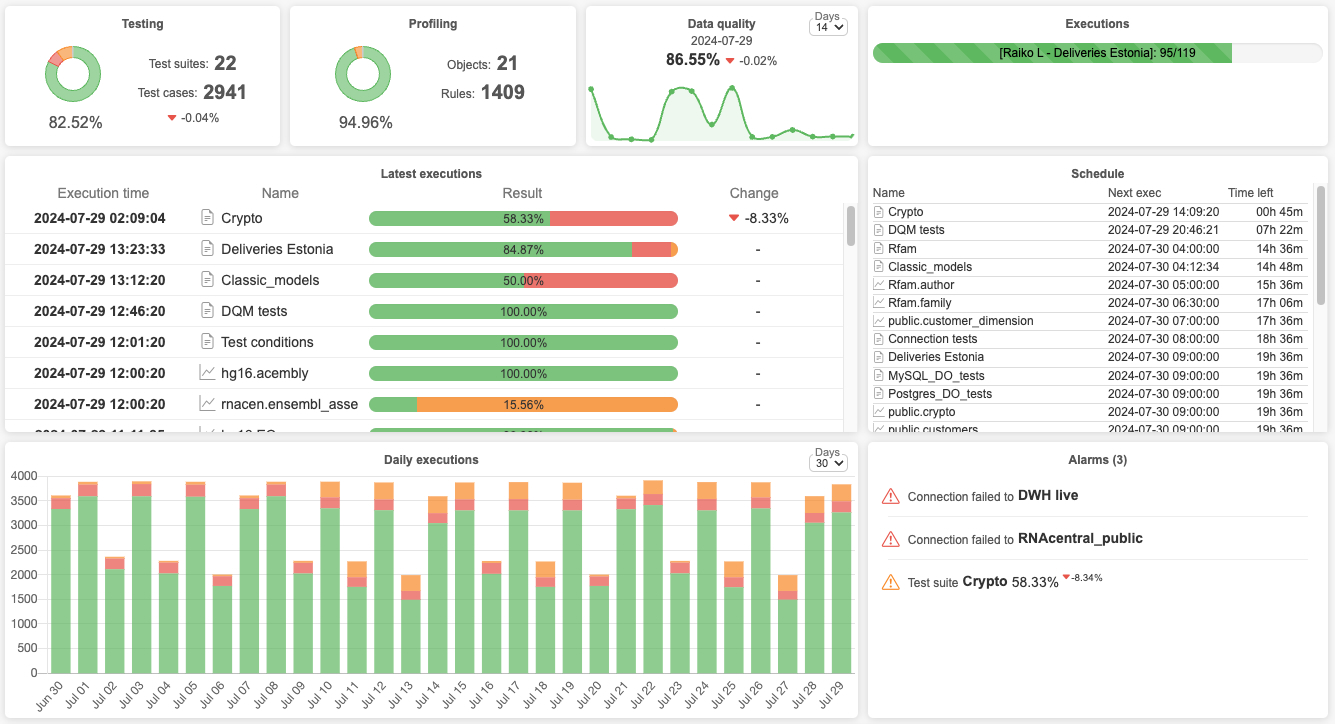

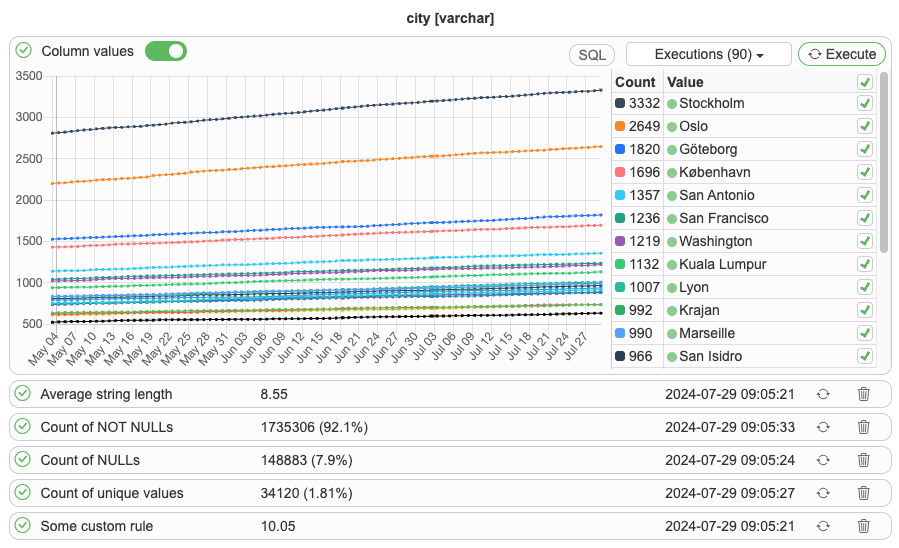

Ensure accurate insights that enable data-driven decisions and avoid negative impacts on business outcomes.

Data-driven initiatives

Deliver impactful data products (e.g. AI & ML) by ensuring data quality and reliability.

Operational efficiency

Reduce costs, improve productivity, and optimize processes through efficient data observability.

Our customers

Bringing our users the best solution to ensure data quality & reliability

“Our collaboration with SelectZero has been smooth and productive. When implementing new solutions, our main goal is for them to help optimize resource usage. The integration of SelectZero’s platform simplifies banking processes and enables us to make even more detailed and personalised decisions based on the data we have.”

Andrus Tamm, Head of Product Development and Technology Area

“SelectZero’s data quality improvement tool is user-friendly and intuitive, helping to streamline our approach to data management. The vendor’s clear communication helped us explore data challenges from new angles, making collaboration smooth and insightful.”

Andris Vedigs, Innovation Lead at Rimi

“The SelectZero platform, while simple and user-friendly, offers the most crucial data quality monitoring capabilities. It is developing rapidly, with new features being added constantly. As a client, we appreciate having a say in development to ensure it aligns with our needs. Additionally, the responsive support provided by the SelectZero Team has been invaluable.”

Anastasija Kravceva, Head of Data Architecture and Delivery at Citadele

Integrations

Data warehouses:

- Apache Hive

- BigQuery

- ClickHouse

- Databricks

- Redshift

- SAP IQ

- Snowflake

- Teradata

- Vertica

Databases:

- Exasol

- MariaDB

- Microsoft SQL Server

- MySQL

- Oracle

- Progress OpenEdge

- PostgreSQL

- SAP HANA

- SQLite

Other:

- CSV files

- Excel files

- Salesforce

- SharePoint

- REST API endpoints

Business intelligence:

- Power BI

- Tableau

Notifications:

- Slack

- Teams

- Jira

- Custom APIs